When Galileo supported the heliocentric model of the solar system through observation, the Vatican clergy forced him to recant his findings, all because they believed the Earth to be the center of the universe. Galileo is famously said to have muttered ‘Eppur si muove’ (Italian for ‘and yet it moves’) in defiance. What he meant was that regardless of their beliefs, or of his own retraction, the Earth still moved along its orbit. This is an old example of science denialism and its futility in the broadest sense, because we now know that Galileo, not the Vatican, was correct. Much has been updated in astronomy since then, but science denial and distrust of scientists remain a major problem today among a significant number of people. The psychology behind it is the same as that of the disapproving Vatican clergy who accused Galileo of heresy. Just as the scientific process is born of human rationality, distrust of science can be said to be born of our irrationality or, more specifically, of errors of reasoning.

To understand why many people are so readily dismissive of scientific discoveries or facts, we have to understand what science is and why it is so hard for so many of us to grasp. This is especially relevant during this pandemic: as much as we can observe how effective science has been in preventing worst-case scenarios, we can also see how denial of scientific findings and mistrust of scientists in favor of conspiracy theorists are spreading faster than the virus itself. Much like the Earth, which keeps orbiting the sun regardless of our denial, the virus keeps doing what it has evolved to do: replicate and infect as many people as possible. For humans, science is the best and only method we have to understand this pandemic and fight it. There is no viable alternative yet. Denying science threatens public health and human progress itself.

Problems of complexity

No matter where you are from or whatever your affiliations, this pandemic has surely affected you in one way or another. The world may suffer an economic depression worse than the Great Depression of the 1930s, a vaccine against SARS-CoV-2 looks distant, and lockdown and physical distancing seem like the only viable solutions at our disposal. In the face of all this, it is natural for people to be afraid, to adopt a defensive stance, and to distrust authorities. Science denialism also arises from defensive psychology, because understanding scientific literature requires rigorous training that only a few dedicated and motivated individuals can achieve. The rest of us may feel left out and have no choice but to trust these elites for important decisions. This may project an authoritarian picture onto the entire field of science, one in which knowledge flows only from a select few to everyone else. The assumption of a hidden agenda may then give rise to numerous conspiracy theories. Combine this with the fact that people become more defensive and make emotional decisions during a crisis, and we have a perfect recipe for any anti-science movement.

Current scientific knowledge is inconceivably vast, so much so that it is almost impossible for an individual to completely master any one field. This is one reason why, in the 21st century, we hear more about ‘teams’ of scientists than about individuals discovering something groundbreaking. Of course, individual contribution is not yet dead, but an individual is severely limited. Scientific work demands plenty of resources, which an individual or a small lab cannot always obtain or manage alone. It requires funding and more people. This is an inevitable consequence of modern economics, politics, and the growing complexity of scientific findings themselves. For this reason, we have many areas of specialization, where a small number of highly trained and skilled individuals study one aspect of a field in depth in order to become experts. Take the field of infectious disease itself: gone are the days of Louis Pasteur, when one scientist alone could stand as an authority. There are microbiologists, clinicians, public health scientists, molecular biologists, pharmacists, nanotechnologists, and biomedical engineers who all contribute to the field in their own nuanced ways. These days the field of HIV/AIDS is broader than the entire field of medicine during antiquity, as specialists from different fields of science dedicate their entire professional lives to studying just this subject. So for those of us who are not aware of such intricacies and are not trained in any field of science, it might all seem too esoteric and overwhelming to comprehend, and people usually trust only the things they can understand. Wanting to take charge of a situation oneself is human nature, whatever the intention. It is thus not surprising that people with little scientific literacy are the ones who fall victim to the mentality that denies the validity and credibility of the entire scientific process.

What do we mean by ‘science’?

Science is a process. It is not even a body of knowledge. The scientific process updates and optimizes human knowledge. This involves identifying a problem in our surroundings, forming an initial assumption as to what could be the reason behind it, and coming up with a practical experiment in which we observe in an organized way to test whether our initial assumption was right or wrong. If it was right, we then check how our process may have gone wrong and where our psychological biases may have influenced our results. If our assumption was wrong, we try to learn what went wrong, judge how reasonable the experiment will be for testing that assumption in the future, and refine the initial assumption itself. The goal of the scientific process is not to ‘prove’ our initial assumption but to try to ‘disprove’ it, so that we get closer to an unbiased fact with each attempt.

I have carefully used the word ‘closer’ to indicate that despite the use of the efficient scientific process, we may never really get to the truth itself. We make the best approximation of the truth, and that is a limitation imposed by the boundaries of technology, perception, and psychology. The initial assumption is known as the ‘hypothesis’. Our hypothesis becomes a ‘theory’ when it becomes very hard to disprove. A theory is something that is tested and verified. We should not confuse these two terms. For example, the idea of gravitational waves used to be a hypothesis postulated by Albert Einstein. After the LIGO experiment detected them, a result announced in 2016, the hypothesis was so hard to disprove that it became a theory. Gravitational waves are a reality hard to dismiss for now. This is unlike the idea of the multiverse, which is a hypothesis not yet confirmed through experiment.

In simple words, science is just a problem-solving tool at our disposal, much like a hammer. We use the hammer to solve the problem of nailing or breaking something. Most of the time we successfully drive a nail or a peg into a surface, but sometimes the hammer misses and bashes our fingers instead. Sometimes the peg will not go in as expected. We learn from the failures and seek to make a better hammer, or use technology to reduce our work: perhaps we invent and use an electric hammer or a nail gun, which are more efficient than a traditional hammer for numerous reasons. Likewise, the scientific process keeps updating information and keeps getting more efficient with each iteration. When we say ‘best science’ in writing, we actually mean ‘best till now’. Some newer areas of knowledge can change drastically because the gaps are plenty (for example, COVID-19 research), while older areas of knowledge are harder to change because they have fewer gaps in comparison. The more we find out about something, the more we know about it, but we also simultaneously broaden the horizon of unknown problems. The scientific process is not flawless, but it is the best system we currently have. We have a duty to improve the process without denying it, because the reality is that only the scientific process can improve itself.

Science and pseudoscience

Just because science has its practical limitations, we cannot assume that anything extraordinary is true without using science. Creative (out-of-the-box) thinking is paramount to formulating groundbreaking new ideas and hypotheses, but these should be sound and reasonable rather than random and disorganized, because reasonable hypotheses have a greater chance of passing the scientific test. This is where I think pseudoscience and anti-science lose credibility: their proponents argue from unreasonable premises and disorganized hypotheses. We can thus say with great confidence that they are incorrect and therefore impractical.

When an experimental model is unlikely to deliver the results it promises, it makes no sense to keep spending energy and resources on it. Credible scientific journals have something called ‘peer review’ or ‘refereeing’ that exists to check the validity and cogency of proposed ideas. These systems exist to separate unsound information from sound information and to check whether someone’s experiment or idea was faulty. Without proper peer review, we would not have a standard for distinguishing accurate from inaccurate information. This is crucial because it is human nature to be biased and to make mistakes regardless of the amount of training, which calls for a robust system of checks and balances. Biases are not abnormal; they are natural errors of reasoning which only more training can reduce. Even the most polished papers can have subtle biases, which is one reason why we do not trust people in place of the process.

Scientific papers include transparent disclaimers and descriptions of their methods for this very reason. Other independent and neutral scientists or institutes can then try to replicate the experiment to check whether it works. If people follow our methods, they should get the same or similar results, provided the methods are sound. We call this replicability, and it is an essential quality of the scientific process. It is how we know whether someone is mistaken. Pseudoscientific and anti-science blogs and portals, however, never really uphold any of these standards. As a result, it makes sense for serious scholars and academics to dismiss anything that does not adhere to basic scientific standards, something that the proponents of pseudoscience frequently complain about.

Pseudoscience and anti-science

Below are some popular examples of pseudoscientific information or practices:

- Calls to drink cow urine to cure or prevent COVID-19 by popular godmen, priests, and gurus like Ramdev.

- Use of homeopathy or unsound herbal concoctions to make sanitizers or medicines against the virus (e.g. Arsenicum album 30C).

- Some forestry chemist from Nepal mixing random fluids to create ‘Herbal Vaccines’ against COVID-19.

- Claims of 5G technology causing COVID-19 despite lack of evidence.

- Heads of state touting medical drugs like hydroxychloroquine as effective before rigorous trials had reported results.

- Christian pastors using faith-healing techniques to fight COVID-19.

- Media celebrity-doctors like Dr. Oz in the United States and Dr. Sundar Mani Dixit in Nepal proposing ineffective solutions to prevent COVID-19 infections.

- Conspiracy theories like the documentary “Plandemic” blaming vaccines, Bill Gates and China for the pandemic.

Pseudoscience usually overtakes the discourse, much to the detriment of human progress: credible websites get only a few thousand hits, while pseudoscientific and conspiracy websites get millions. Its proponents usually have double standards or none at all. They are severely marred by confirmation bias: they affirm only the information that supports their deeply held beliefs and reject whatever challenges them. In short, they tend to cherry-pick information that suits them. An absence of critical thinking skills and a lack of scientific rigor make it easier for us to fall into this trap of misinformation. Pseudoscience rests on easily understandable but erroneous premises and propagates in an attention-grabbing, sensationalist manner. This also explains why it spreads so much more easily than actual science, which is hard to understand despite being correct and usually requires expertise to grasp. The end effect is mass confusion and real harm.

Anti-science differs from pseudoscience in terms of intention. While not everything pseudoscientific is born of ill intention, anti-science always has a malicious motive. It could be religious fanaticism, political dogmatism, financial incentives, or a simple desire for popularity or fame. When science rejects their agenda, anti-science proponents tend to dismiss the mainstream scientific consensus and dishonestly churn out counterproductive conspiracy theories, movements, or ideas that have no basis in reality.

Some examples of anti-science are:

- Climate change denying lobbyists and politicians funded by fossil fuel corporations.

- Hindutva proponents like Yogi Adityanath, Ramdev, Ravi Shankar and Jaggi Vasudev who try to belittle scientific advancements in order to promote their own opinions, political agenda, or products.

- Young-Earth creationist Christians who actively deny the fact of evolution in order to promote their God’s mandate.

- Documentaries falsely declaring this pandemic as a conspired plan despite COVID-19 originating organically from wildlife.

- Authoritarian nations pushing conversion therapy to ‘cure’ homosexual and transgender individuals.

Scientists are individuals with their own families, hobbies, and lives. They have their own conscience and dedicate their entire lives to solving problems and helping humanity. They are almost never part of an organized conspiracy trying to overthrow a certain culture or the world order, like in the movies. Anti-science conspiracy theories and movements are unfair toward these people and harmful in general, as they misdirect public interest toward less productive areas. This is even more so when rich celebrities and media and corporate moguls share false information based on half-baked and ill-informed opinions, which unfortunately have great reach.

Misinformation usually travels as negative news, a presentation termed ‘negative framing’. Negative news tends to grab people’s attention more quickly, and research shows that when unaware people are constantly exposed to negative framing, they tend to get defensive and rely on emotional reasoning for important decisions, which generally leads to poor outcomes. They may also fall victim to something called “optimism bias”: when scientific warnings are belittled, people wrongly assume that they will not contract the disease and tend to violate stay-at-home orders and physical distancing, risking more contagion and endangering everyone else. One famous example is the denial of HIV/AIDS by Thabo Mbeki’s government in South Africa. It denied antiretroviral treatment (ART) to HIV-positive pregnant women, which harmed more than 300,000 lives.

Growing inaccessibility of scientific literature

Another major reason why distrust in science is growing could be the hard-to-get and hard-to-understand nature of scientific literature itself. The most credible and well-respected medical and scientific journals are usually restricted by expensive paywalls, and the public has to rely on secondary information from science journalists, who either have various incentives to communicate only the information they deem fit, as is the nature of their job, or do not thoroughly understand the science. Paywalls in the science-publishing ecosystem simply restrict public access, even for scientifically literate people and independent researchers like myself who would benefit immensely from the literature. This could eventually lead to the perception that scientific journals are purely profit-driven and thus counterproductive to the distribution of information and to transparency, which could in turn reinforce the claims of conspiracy theorists and science deniers.

The scientific community has long recognized this problem, and there is currently an immense effort to establish open-access publications, i.e. publications free from paywalls. Some people may argue that maintaining scientific journals is expensive and resource-intensive, as referees need compensation for their work and there are other logistical expenses. However, the fact that the major publishing companies thrive on a profit-driven model makes this argument less compelling. The majority of research and development is paid for in one way or another by taxpayer money, and yet only 15-25% of all scientific research is currently open-access. That makes a strong case for open-access science.

Another solution is for scientific literature to include lay summaries in simple, jargon-free language that the general public can understand. Relying on journalists, rather than the original researchers themselves, to translate the information creates room for misinterpretation and miscommunication of crucial concepts. This strategy, in combination with open-access online publishing, might help make science a little more accessible to the public and honor the ethics of freedom of information. Such efforts may help mitigate some mistrust of science, but will not eliminate it altogether. Some people will still choose to deny the facts regardless of access; the goal should be to make such denial less appealing to unaware people.

‘Eastern’ vs ‘Western’ science

In Asia (especially South Asia, in my experience), distrust of science can be traced back to the historical context of imperialism. Most Asian nations went through tough periods of colonization by western powers for a couple of centuries until the 20th. Their western conquerors inadvertently introduced the subcontinent to better science, but they also applied their knowledge unethically to maintain their power and economies. Having experienced this as a society, many people here tend to dismiss mainstream science as an encroaching “western” concept in favor of an “eastern” one, which they consider benign. Such people usually have their identities and dignity tied to this broad historical context and thus refuse to accept the fact that modern science has no such dichotomy and belongs to all of humanity regardless of historical or cultural achievements. The truth is that any idea that is rigorously tested and found to be true automatically falls under the realm of science, no matter where it comes from.

Popular godmen, new-age Hindu evangelists, and traditional medicine practitioners tend to be very vocal about how “western science” is invading the lives of humankind and how their own “eastern science” has lost significance. They tend to dismiss the obvious and greater benefits that have resulted from the use of scientific methods (such as astronomy and evidence-based medicine), only highlight the demerits of modernity (e.g. disadvantages of industrialization or side effects of manufactured drugs), and exaggerate the latter to gain support among their followers and fans. Rejecting any scientific findings or data that disagree with their world-views and agreeing with only those that complement their beliefs is an essential characteristic of science-denial based on this east-west dichotomy.

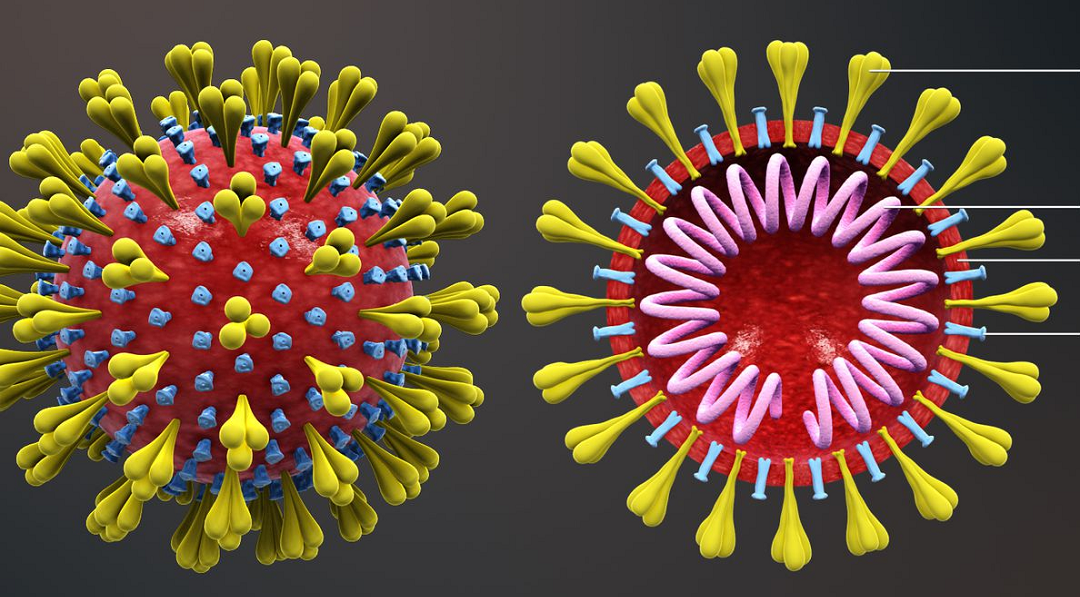

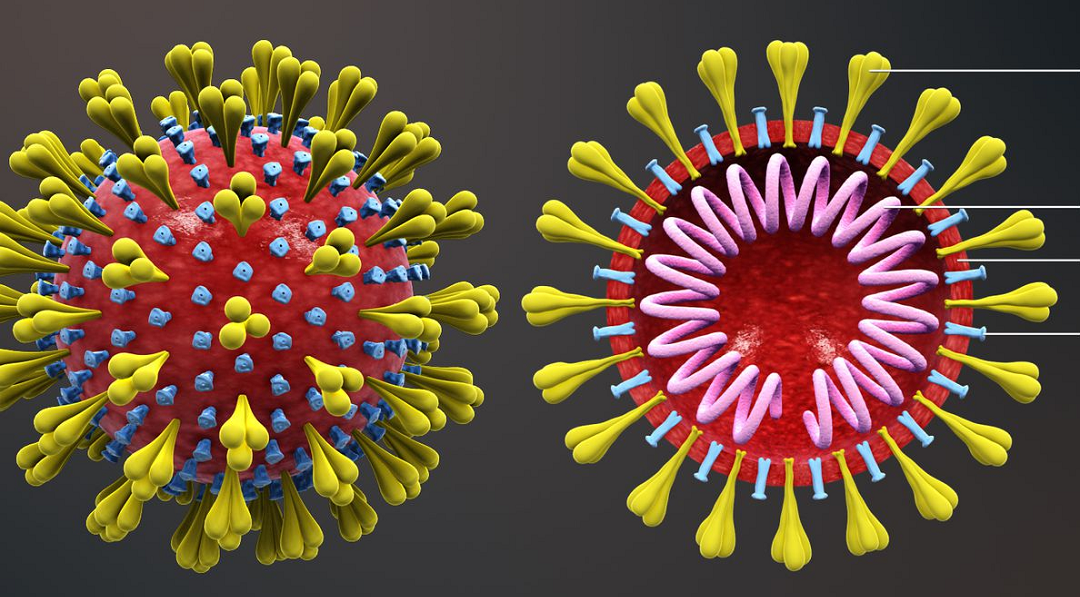

As COVID-19 poses a great challenge for modern science, this type of cultural denial of science has been flourishing on social media. People have gone so far as to propose cow urine as a means to prevent infection, rationalizing their claims with an appeal to their own culture in denial of credible information. This is probably because people have witnessed how this pandemic crippled even the supposedly well-equipped industrial nations and their science, forgetting that it was this very science that allowed scientists to sequence the genome of the virus and describe its full morphology in the first place. They also tend to overlook the fact that social distancing, lockdown, and basic hand hygiene are all products of scientific and mathematical models. This kind of denial, rooted in a cultural inferiority complex, is severely counterproductive, especially for developing nations. Moreover, to make matters worse, less aware and scientifically illiterate people tend to be more prejudiced and xenophobic during a pandemic than those who are more scientifically literate, and this can be dangerous in many ways. Unless we improve the critical thinking elements in our regional curricula and make people aware of the philosophy of science from an early age, such a mentality will only end up delaying progress where it is needed.

Where do we go from here?

Denial of scientific facts is rooted in human psychology, especially if we are untrained. It can challenge scientists and policymakers in many ways. I am sure I still have not done justice to this broad topic, which has its own dedicated experts. However, if you ask me, I would say that we should start fighting this denial by enforcing and measuring basic scientific literacy at every level of education. Nations around the world measure their literacy rates all the time, but I think it would be worthwhile to simultaneously measure mathematical dexterity and basic scientific literacy, so that we could derive a roadmap for improving our scientific education.

The media and social media companies should ethically evaluate the harms of spreading misinformation and pseudoscientific propaganda, and should probably label such content accordingly or publish it along with some kind of warning, like those on dubious Wikipedia articles and cigarette packets. Communication strategies must be balanced so that they cause neither too much fear nor too much optimism bias. Science should be more accessible, and publications should be funded through a better scheme so that they can sustain unbiased refereeing without turning away independent researchers, enthusiasts, or institutions.

There are many ways to mitigate people’s distrust of science, and they should be both top-down and bottom-up. In short, as much as it is the responsibility of governments and authorities to fight such a harmful mentality, it is paramount that we also individually arm ourselves against it by honing our critical thinking skills and basic scientific literacy. Imagine how easy it would have been to curtail this infection before it became a serious pandemic if the world had double the number of scientifically literate people it has today. Do we want to live in such a world? If the answer is yes, then we should start by solidifying trust in the scientific method. There is, sadly, no other way.

(The author is an incoming neurology resident at Detroit Medical Center, Michigan/Wayne State University)